Sydney, June 12: Two weeks ago, a modest-looking paper was uploaded to the arXiv preprint server with the unassuming title “On the invariant subspace problem in Hilbert spaces”. The paper is just 13 pages long and its list of references contains only a single entry.

The paper purports to contain the final piece of a jigsaw puzzle that mathematicians have been picking away at for more than half a century: the invariant subspace problem.

Famous open problems often attract ambitious attempts at solutions by interesting characters out to make their name. But such efforts are usually quickly shot down by experts.

However, the author of this short note, Swedish mathematician Per Enflo, is no ambitious up-and-comer. He is almost 80, has made a name for himself solving open problems, and has quite a history with the problem at hand.

Enflo is also one of the great problem-solvers in a field called functional analysis. Aside from his work on the invariant subspace problem, Enflo solved two other major problems – the basis problem and the approximation problem – both of which had remained open for more than 40 years.

By solving the approximation problem, Enflo cracked an equivalent puzzle called Mazur’s goose problem. Polish mathematician Stanislaw Mazur had in 1936 promised a live goose to anyone who solved his problem – and in 1972 he kept his word, presenting the goose to Enflo.

What’s an invariant subspace?

Now we know the main character. But what about the invariant subspace problem itself?

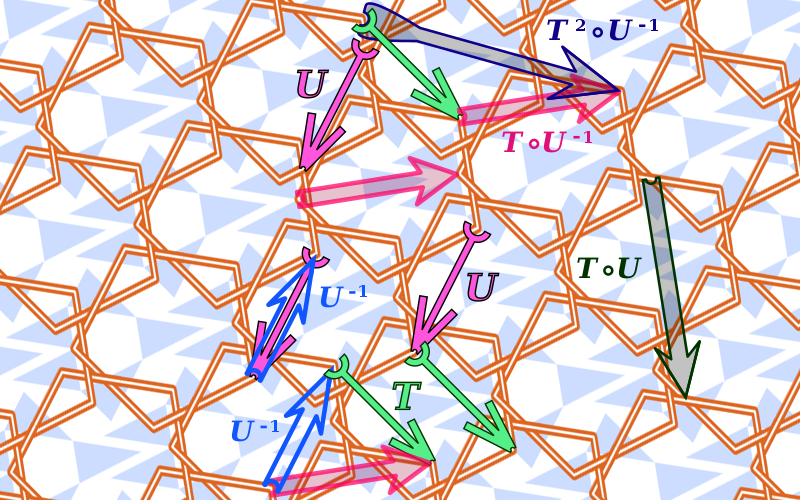

If you’ve ever taken a first-year university course in linear algebra, you will have come across things called vectors, matrices and eigenvectors. If you haven’t, we can think of a vector as an arrow with a length and a direction, living in a particular vector space. (There are lots of different vector spaces with different numbers of dimensions and various rules.)

A matrix is something that can transform a vector, by changing the direction and/or length of the arrow. If a particular matrix changes only the length of a particular vector (meaning the direction is either the same or flipped to the opposite direction), we call the vector an eigenvector of the matrix.

Another way to think about this is to say that the matrix maps the line through each eigenvector back onto itself: such a line is invariant for this matrix. Each of these lines is called an invariant subspace of the matrix.
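
For readers who like to see the arithmetic, here is a minimal sketch of that idea in Python. The 2×2 matrix `A` and the helper names `apply` and `stays_on_its_line` are made up for illustration and do not come from Enflo's paper; the sketch only checks whether a matrix sends a given arrow back onto the line it already lies on.

```python
# Illustrative example only: the matrix A and both helper functions are
# hypothetical, chosen to show what "invariant line" means in two dimensions.

def apply(matrix, vector):
    """Multiply a 2x2 matrix (given as a list of rows) by a 2D vector."""
    (a, b), (c, d) = matrix
    x, y = vector
    return (a * x + b * y, c * x + d * y)

def stays_on_its_line(matrix, vector):
    """Return True if the matrix maps the vector onto the line it spans."""
    x, y = vector
    tx, ty = apply(matrix, vector)
    # Two 2D vectors lie on the same line through the origin exactly
    # when this cross product is zero.
    return x * ty - y * tx == 0

A = [[2, 1],
     [1, 2]]

print(apply(A, (1, 1)))               # (3, 3): same direction, three times as long
print(apply(A, (1, -1)))              # (1, -1): unchanged
print(apply(A, (1, 0)))               # (2, 1): knocked off its original line
print(stays_on_its_line(A, (1, 1)))   # True
print(stays_on_its_line(A, (1, 0)))   # False
```

In this example the arrows (1, 1) and (1, -1) are eigenvectors, so the lines through them are invariant, while the arrow (1, 0) is pushed onto a different line.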

Eigenvectors and invariant subspaces are also of interest beyond just mathematics – to take one example, it has been said that Google owes its success to “the $25 billion eigenvector”.

What about spaces with an infinite number of dimensions?

So that’s an invariant subspace. The invariant subspace problem is a little more complicated: it is about spaces with an infinite number of dimensions, and it asks whether every linear operator (the equivalent of a matrix) in those spaces must have a non-trivial invariant subspace, meaning one that is neither just the zero vector nor the whole space.
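
For the mathematically inclined, the question is usually stated along the following lines. This is the standard textbook formulation for Hilbert spaces, not a quotation from Enflo's paper, and the symbols $H$, $T$ and $M$ are introduced here only for illustration.

```latex
Let $H$ be an infinite-dimensional separable complex Hilbert space and
$T \colon H \to H$ a bounded linear operator. Must there exist a closed
subspace $M \subseteq H$ with
\[
  \{0\} \neq M \neq H
  \quad\text{and}\quad
  T(M) \subseteq M \,?
\]
```

The condition $T(M) \subseteq M$ is the infinite-dimensional counterpart of a matrix mapping a line back onto itself.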

(AGENCIES)